Astronomy photographs can be challenging to process: they are often noisy and blurred. Additionally, they contain a lot of faint details, such as small stars that may be only a few pixels wide. To overcome these problems, frame stacking software is used to combine the data contained in hundreds (possibly tens of thousands) of pictures. This complex process can produce stunning photographs, even from amateur-grade hardware.
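Stacking works because noise averages out while the signal does not: if N aligned frames carry independent noise of standard deviation σ, their pixel-wise mean has a noise level of σ/√N. A minimal NumPy sketch of this idea, assuming the frames are already registered (aligned) and the noise is Gaussian and independent between frames; real stacking software also aligns the frames and often uses median or sigma-clipped combination rather than a plain mean:

```python
import numpy as np

def mean_stack(frames):
    """Pixel-wise mean of a stack of aligned frames, shape (N, H, W) -> (H, W)."""
    return np.mean(np.asarray(frames, dtype=np.float64), axis=0)

rng = np.random.default_rng(0)
clean = np.full((120, 160), 0.5)   # flat synthetic "sky" so the residual is pure noise
sigma = 0.1                        # per-frame noise standard deviation (illustrative)

for n in (1, 4, 16, 64):
    frames = clean + rng.normal(0.0, sigma, size=(n, 120, 160))
    residual = mean_stack(frames) - clean
    # measured noise after stacking vs. the sigma / sqrt(N) prediction
    print(f"N={n:3d}  measured={residual.std():.4f}  predicted={sigma / np.sqrt(n):.4f}")
```

The measured residual tracks σ/√N closely, which is why combining many frames lets faint stars emerge from the noise.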

Milky Way, photo by Nathan Anderson from Silverthorne, United States


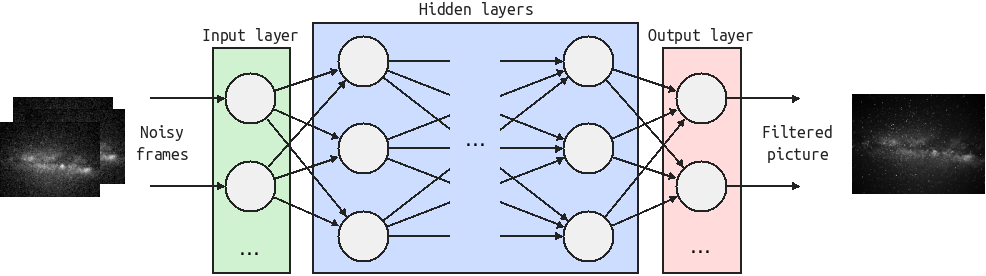

In a previous article, I used machine learning to run some basic digital signal processing experiments. This small project is a follow-up proof of concept. The objective is to define an artificial neural network (ANN) and train it to take several noisy, blurred astronomy pictures and output a single "clean" photograph.

Schematic of the neural network implemented in this article


Implementation

I focused on two common types of picture defects:

- Noise: addition of a random signal to the picture,

- Blur: convolution between the picture and a kernel.
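The two defects combine into a standard degradation model. Writing $x$ for the clean picture, $h_k$ for the blur kernel applied to frame $k$ (which may differ from frame to frame), $*$ for 2-D convolution and $n_k$ for the noise, frame $k$ can be written as follows. The notation is introduced here for illustration, and applying the blur before the noise is an assumption (it is the usual convention):

$$
y_k = h_k * x + n_k, \qquad n_k(i, j) \sim \mathcal{N}(0, \sigma^2), \qquad k = 1, \dots, N
$$

The network receives the $N$ frames $y_1, \dots, y_N$ and must estimate $x$. By the convolution theorem, $\mathcal{F}\{h_k * x\} = \mathcal{F}\{h_k\} \cdot \mathcal{F}\{x\}$, which is what makes FFT-based convolution a cheap way to generate blurred frames.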

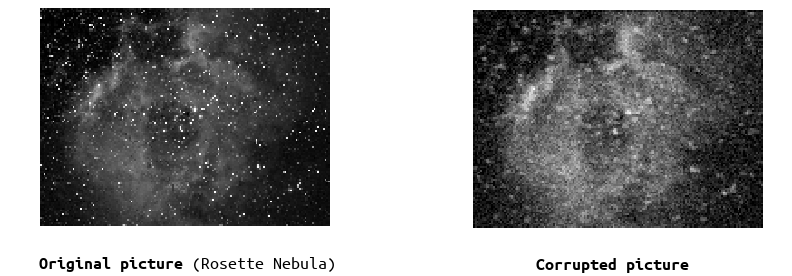

To build my training data set, I wrote a corruption function that takes pictures and generates altered frames with noise (normally distributed) and blur (FFT convolution with a Gaussian kernel of random parameters). To save computation time on my low-power laptop, I had to downscale my input pictures to 160×120 pixels and convert them to greyscale.
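A minimal NumPy sketch of such a corruption function, assuming greyscale images scaled to [0, 1] and stored as (height, width) arrays. The function names, the 9×9 kernel size and the ranges of the random blur and noise parameters are hypothetical placeholders, not the values from the original script. The FFT convolution zero-pads both arrays to the full linear-convolution size so that no circular wrap-around occurs, then crops the result back to the input size:

```python
import numpy as np

def gaussian_kernel(size, sigma):
    """Normalised 2-D Gaussian kernel of shape (size, size)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def fft_convolve_same(image, kernel):
    """Linear 2-D convolution via FFT, cropped to the input's shape ('same' mode)."""
    h, w = image.shape
    kh, kw = kernel.shape
    fh, fw = h + kh - 1, w + kw - 1  # full linear-convolution size, avoids wrap-around
    spectrum = np.fft.rfft2(image, s=(fh, fw)) * np.fft.rfft2(kernel, s=(fh, fw))
    full = np.fft.irfft2(spectrum, s=(fh, fw))
    top, left = (kh - 1) // 2, (kw - 1) // 2  # centre the crop (odd kernel sizes)
    return full[top:top + h, left:left + w]

def corrupt(image, rng, max_sigma_blur=2.0, max_sigma_noise=0.1, kernel_size=9):
    """Return one blurred and noisy copy of a greyscale image (hypothetical ranges)."""
    sigma_blur = rng.uniform(0.5, max_sigma_blur)   # random Gaussian kernel width
    sigma_noise = rng.uniform(0.0, max_sigma_noise)  # random noise level
    blurred = fft_convolve_same(image, gaussian_kernel(kernel_size, sigma_blur))
    return blurred + rng.normal(0.0, sigma_noise, size=image.shape)
```

Calling `corrupt(picture, np.random.default_rng())` several times on the same picture yields independently corrupted frames, each with its own blur and noise level.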

The picture on the right side is an input to my neural network; the objective is to reconstruct the picture on the left. To give the network more data, 8 corrupted frames are generated for each picture and stacked together. 8 is an arbitrary number; more is better.
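One way to turn the corrupted frames into network inputs is to stack them along the last (channel) axis, which gives the (120, 160, 8) input shape listed in the model summary. A sketch, assuming a corruption function with the hypothetical signature `corrupt_fn(image, rng)`; the noise-only stand-in in the usage lines only keeps the example self-contained and should be replaced by a blur-plus-noise function:

```python
import numpy as np

N_FRAMES = 8  # the article's choice of corrupted frames per picture

def make_sample(clean, corrupt_fn, rng, n_frames=N_FRAMES):
    """Build one (input, target) pair: n corrupted frames stacked on the last axis."""
    frames = [corrupt_fn(clean, rng) for _ in range(n_frames)]
    x = np.stack(frames, axis=-1).astype(np.float32)  # (H, W, n_frames)
    y = clean[..., np.newaxis].astype(np.float32)     # (H, W, 1)
    return x, y

def make_dataset(pictures, corrupt_fn, rng, n_frames=N_FRAMES):
    """Stack samples into arrays of shape (B, H, W, n_frames) and (B, H, W, 1)."""
    pairs = [make_sample(p, corrupt_fn, rng, n_frames) for p in pictures]
    xs, ys = zip(*pairs)
    return np.stack(xs), np.stack(ys)

def noise_only(image, rng):
    """Hypothetical stand-in corruption: additive Gaussian noise only."""
    return image + rng.normal(0.0, 0.05, size=image.shape)

rng = np.random.default_rng(0)
pictures = rng.random((4, 120, 160))  # four random 160x120 "pictures"
x_train, y_train = make_dataset(pictures, noise_only, rng)
print(x_train.shape, y_train.shape)  # (4, 120, 160, 8) (4, 120, 160, 1)
```

Keeping the frames in the channel axis lets every layer see all eight observations of a pixel at once.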

For the machine learning, I used Python 3 with TensorFlow. TensorFlow is an open-source software library for machine learning applications such as neural networks, originally developed by researchers and engineers working on the Google Brain team.

The following listing is the summary of my final neural network model.

```
>>> print(model.summary())
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 120, 160, 8)       0
_________________________________________________________________
dense_1 (Dense)              (None, 120, 160, 8)       72
_________________________________________________________________
dense_2 (Dense)              (None, 120, 160, 16)      144
_________________________________________________________________
dense_3 (Dense)              (None, 120, 160, 16)      272
_________________________________________________________________
dense_4 (Dense)              (None, 120, 160, 16)      272
_________________________________________________________________
conv2d_transpose_1 (Conv2DTr (None, 120, 160, 32)      2080
_________________________________________________________________
dense_5 (Dense)              (None, 120, 160, 16)      528
_________________________________________________________________
conv2d_transpose_2 (Conv2DTr (None, 120, 160, 32)      2080
_________________________________________________________________
dense_6 (Dense)              (None, 120, 160, 16)      528
_________________________________________________________________
dense_7 (Dense)              (None, 120, 160, 16)      272
_________________________________________________________________
dense_8 (Dense)              (None, 120, 160, 16)      272
_________________________________________________________________
dense_9 (Dense)              (None, 120, 160, 16)      272
_________________________________________________________________
dense_10 (Dense)             (None, 120, 160, 1)       17
=================================================================
```

I used a simple mean squared error (MSE) loss function with the Nadam optimizer. The code is on my GitHub: ml_frame_stacking.py at CGrassin/DSP_machine_learning.
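The summary fixes layer types, widths and parameter counts, but not activations or kernel sizes. The sketch below rebuilds a model with the same shapes and parameter counts using the tf.keras API; ReLU activations and a linear output layer are assumptions, and the 2×2 transposed-convolution kernel is inferred from the 2080 parameters (2·2·16·32 + 32), although a 4×1 or 1×4 kernel would give the same count. The author's actual code is in the repository mentioned above.

```python
import tensorflow as tf
from tensorflow import keras

def build_model(height=120, width=160, n_frames=8, activation="relu"):
    """Layer stack matching the summary's shapes and parameter counts (activations assumed)."""
    inputs = keras.Input(shape=(height, width, n_frames))
    x = keras.layers.Dense(8, activation=activation)(inputs)  # 8*8 + 8 = 72
    x = keras.layers.Dense(16, activation=activation)(x)      # 8*16 + 16 = 144
    x = keras.layers.Dense(16, activation=activation)(x)      # 16*16 + 16 = 272
    x = keras.layers.Dense(16, activation=activation)(x)      # 272
    # 2x2 kernel inferred from the parameter count: 2*2*16*32 + 32 = 2080
    x = keras.layers.Conv2DTranspose(32, kernel_size=2, padding="same", activation=activation)(x)
    x = keras.layers.Dense(16, activation=activation)(x)      # 32*16 + 16 = 528
    x = keras.layers.Conv2DTranspose(32, kernel_size=2, padding="same", activation=activation)(x)
    x = keras.layers.Dense(16, activation=activation)(x)      # 528
    for _ in range(3):
        x = keras.layers.Dense(16, activation=activation)(x)  # 272 each
    outputs = keras.layers.Dense(1)(x)                        # 16 + 1 = 17, linear output
    model = keras.Model(inputs, outputs)
    model.compile(optimizer=keras.optimizers.Nadam(), loss="mse")
    return model

model = build_model()
print(model.count_params())  # 6809
```

A Dense layer applied to a (height, width, channels) tensor acts on the channel axis only, so most of this network mixes the 8 frames pixel by pixel; only the transposed convolutions look at neighbouring pixels (with 2×2 kernels, each output pixel sees a 3×3 neighbourhood of the input). Training then amounts to calling `model.fit` on inputs of shape (batch, 120, 160, 8) and targets of shape (batch, 120, 160, 1).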

Results

The network was trained on about 100 astronomy pictures, and I used a separate set of pictures to test its response.
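The test pictures are judged visually here. If a number is wanted as well, the peak signal-to-noise ratio (PSNR) against the clean picture is a common choice, and it follows directly from the MSE used as the training loss: PSNR = 10·log10(MAX² / MSE). A minimal sketch, assuming images scaled to [0, 1]; this metric is an illustrative addition and is not reported in the original experiment:

```python
import numpy as np

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum pixel value."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)
```

For a test picture, one could compare `psnr(clean, frames[..., 0])` (a single corrupted frame), `psnr(clean, frames.mean(axis=-1))` (a plain average of the frames) and the PSNR of the network's output; `clean` and `frames` are hypothetical names for the clean picture and its (H, W, 8) stack of corrupted frames.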

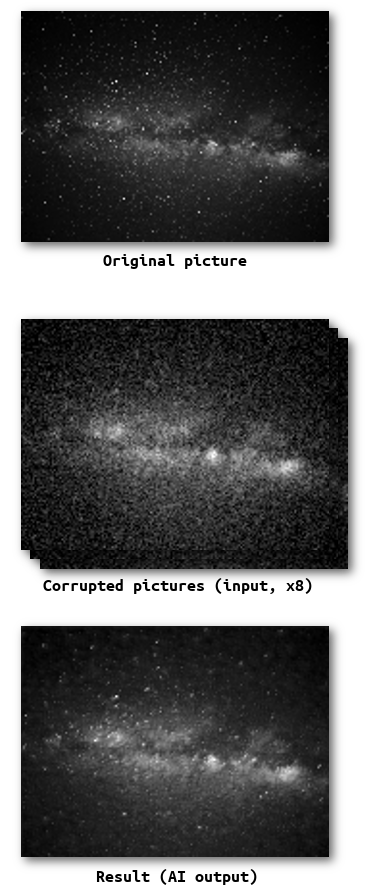

This first example is our Milky Way, a picture heavily affected by noise.

The faintest stars were lost in the reconstruction, but most of the information was successfully recovered! Some noise artifacts and a bit of residual blur remain.

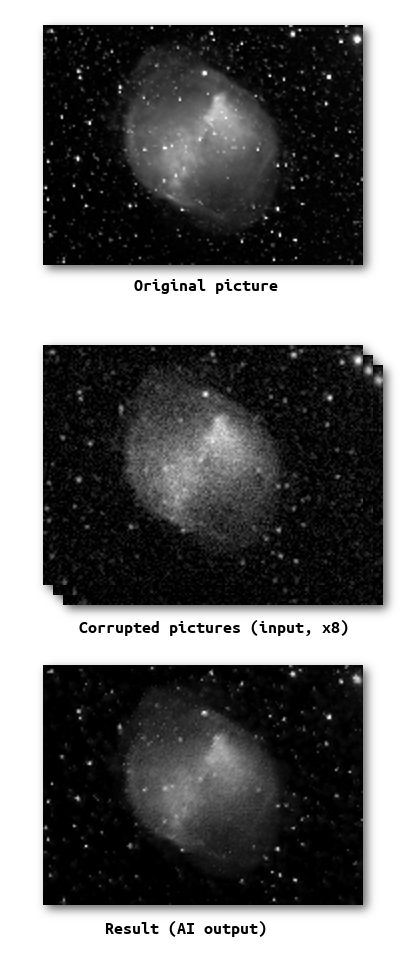

This second example is the Dumbbell Nebula (NGC 6853). Because nebulae are "blurry" by nature, this makes a demanding test for the deconvolution part.

As expected, the result is not as sharp as the original picture, simply because some of the information was lost in the convolution. However, the network did manage to improve it. This is especially noticeable in the stars surrounding the nebula.
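Why the information is lost can be made concrete with the convolution theorem: blurring multiplies the picture's spectrum by the kernel's Fourier transform, and for a Gaussian kernel that transform decays very fast. For a continuous Gaussian of standard deviation $\sigma$ pixels, with $f$ in cycles per pixel (the Nyquist frequency is $f = 0.5$), and taking $\sigma = 2$ purely as an illustrative value since the training kernels used random parameters:

$$
\hat{h}(f) = e^{-2\pi^2 \sigma^2 f^2}, \qquad \hat{h}(0.5)\big|_{\sigma = 2} = e^{-2\pi^2} \approx 2.7 \times 10^{-9}
$$

Detail near the Nyquist frequency is attenuated far below the noise level, so it cannot be recovered from the frames themselves; only the moderately attenuated frequencies can be restored.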

Conclusion

Based on these results (and others), I consider this experiment a satisfying proof of concept. The improvements made by the neural network are clearly noticeable. Unfortunately, due to my computer's limitations, training the ANN with colour pictures, higher resolution and more input frames is not practical. A lot more work and research would be required to turn this PoC into an efficient, user-friendly program.
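A back-of-the-envelope estimate shows why resolution matters so much for this architecture: every layer keeps the full 120×160 resolution, so activation memory grows linearly with the pixel count. A sketch, assuming float32 values and counting only one sample's forward activations (gradients, optimizer state and batch size would all multiply this further); the channel list is read off the model summary:

```python
# Channels of every tensor in the model summary: input, then each layer's output.
CHANNELS = [8, 8, 16, 16, 16, 32, 16, 32, 16, 16, 16, 16, 1]

def activation_bytes(height, width, channels=CHANNELS, bytes_per_value=4):
    """Bytes for one sample's forward activations, ignoring weights and gradients."""
    return height * width * sum(channels) * bytes_per_value

for h, w in [(120, 160), (480, 640), (1080, 1920)]:
    print(f"{w}x{h}: {activation_bytes(h, w) / 1e6:.0f} MB per sample")
# 160x120: 16 MB, 640x480: 257 MB, 1920x1080: 1734 MB
```

Colour input or more frames per picture would widen the first layers and increase these figures further.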

I purposefully trained the network only on astronomy photographs (mostly star clusters, planets and nebulae). This has likely resulted in some over-fitting: my ANN may "know" what an astronomy picture usually looks like and make some decisions based on this knowledge. In this particular case, I consider it acceptable, since the network is purpose-built for this task.

Author: Charles Grassin
